Singularity Systems: Zero to Hero

The Hacker's Guide to compiling PyTorch and CUDA

Singularity Systems: Zero to Hero is a direct follow-up to Karpathy's Neural Networks: Zero to Hero. The textbook walks through, from scratch, how to build a CUDA C compiler, picocuda, that compiles llm.c, and a PyTorch[1] interpreter, picograd, that evaluates nanogpt.py.

Prerequisites

- Software 1.0: Discrete Mathematics (Graph Combinatorics)

- Software 2.0: Continuous Mathematics (Tensor Calculus)

Contributing

The textbook and its compiler implementations are open source. To contribute or discuss, join the #singularity-systems working group in the GPU Mode Discord.

Citation

@article{j4orz2025singsys,
  author = "j4orz",
  title  = "Singularity Systems: Zero to Hero",
  year   = "2025",
  url    = "https://j4orz.ai/zero-to-hero/"
}

Dedication

I dedicate this textbook to my father, Thomas Zhang.

In 2023, he guided me as my plans changed from graduate school to leading my sister's recovery from a severe diagnosis of schizophrenia. Shortly after, he had a stroke. I hope to make you proud, dad.

Karpathy's course was seminal. It influenced Stanford to teach "line by line from scratch", which is also what Gen Z calls it. The idea is procedural epistemology: the best way to learn something is to teach it to a computer. <add_minsky_quote>

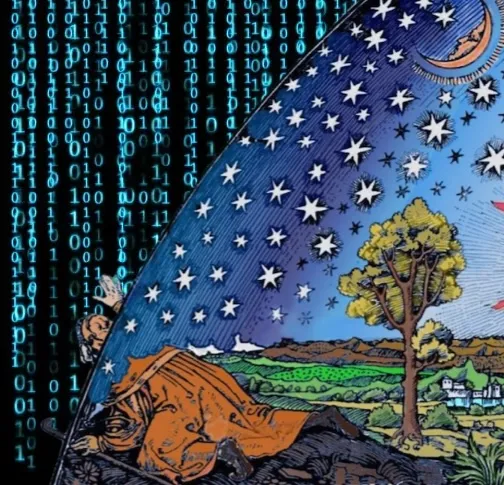

The Art of Computer Programming -> The Art of Multiprocessor Programming

The Elements of Euclid -> Elements of X -> Elements of Programming

Neural Networks: Zero to Hero -> Singularity Systems: Zero to Hero

SICP bridges the entire semantic gap from Scheme down to a register machine. Like SICP, this textbook has you create GPT-2, and then create the compilers for GPT-2.

Humanity has discovered the use of a new language: tensors. Just as Karpathy translated the papers into textbook form (the attention paper, growing neural networks, the internet as fossil fuel, and now reasoners, with continual learning as the next frontier), I want to do the same for PT2, Triton, ThunderKittens, and cuTile. Programming is a mathematical discipline: you need models, which are mathematical. It used to be enough to know discrete mathematics, but now you need continuous mathematics too.

AST + Kempe

Scalar Compilers (pcc/lcc)

In this chapter, we'll implement a simple compiler that translates C89 to RV32I. First, we'll introduce the standard implementation plan for all compilers, which involves lifting the source code into a tree intermediate representation (referred to as an abstract syntax tree) with a parser, and lowering the intermediate representation into target code with a code generator. Then we'll implement the compiler incrementally, one language feature at a time, starting with a language capable of arithmetic, then adding control flow, and finally memory.
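The parse-then-generate plan can be sketched for the arithmetic stage. This is a minimal sketch under assumptions of our own, not picocuda's implementation: it accepts only integer literals, binary `+` and `-`, and parentheses; the names `Num`, `BinOp`, `Parser`, `gen`, and `compile_expr` are hypothetical; and it is written in Python for brevity. The parser lifts the source into an AST, and the generator lowers the AST into RV32I assembly text with a simple stack discipline.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical AST node types for a toy arithmetic subset of C89:
# integer literals, binary + and -, and parentheses. Multiplication is
# left out on purpose: the base RV32I ISA has no multiply instruction
# (mul lives in the M extension).


@dataclass
class Num:
    value: int


@dataclass
class BinOp:
    op: str  # "+" or "-"
    left: Num | BinOp
    right: Num | BinOp


def tokenize(src: str) -> list[str]:
    # Integer literals become one token; every other non-space char is its own token.
    return re.findall(r"\d+|\S", src)


class Parser:
    """Recursive descent for:  expr := atom (('+' | '-') atom)*
                               atom := NUMBER | '(' expr ')'"""

    def __init__(self, tokens: list[str]) -> None:
        self.tokens = tokens
        self.pos = 0

    def peek(self) -> str | None:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self) -> str | None:
        tok = self.peek()
        self.pos += 1
        return tok

    def expr(self) -> Num | BinOp:
        node = self.atom()
        while self.peek() in ("+", "-"):  # looping keeps + and - left-associative
            op = self.advance()
            node = BinOp(op, node, self.atom())
        return node

    def atom(self) -> Num | BinOp:
        tok = self.advance()
        if tok is not None and tok.isdigit():
            return Num(int(tok))
        if tok == "(":
            node = self.expr()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {tok!r}")


def parse(src: str) -> Num | BinOp:
    parser = Parser(tokenize(src))
    tree = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"trailing token {parser.peek()!r}")
    return tree


def gen(node: Num | BinOp, out: list[str]) -> None:
    # Stack-machine style lowering: every subtree leaves its value in a0.
    if isinstance(node, Num):
        out.append(f"li a0, {node.value}")  # li is an assembler pseudo-instruction
        return
    gen(node.left, out)
    out.append("addi sp, sp, -4")  # push the left operand
    out.append("sw a0, 0(sp)")
    gen(node.right, out)
    out.append("lw t0, 0(sp)")  # pop the left operand into t0
    out.append("addi sp, sp, 4")
    out.append(f"{'add' if node.op == '+' else 'sub'} a0, t0, a0")  # t0 op a0


def compile_expr(src: str) -> list[str]:
    out: list[str] = []
    gen(parse(src), out)
    return out
```

Calling `compile_expr("1 + 2")` yields seven instructions ending in `add a0, t0, a0`, leaving the result in `a0`, the RISC-V return-value register. Spilling every intermediate to the stack is wasteful, but it keeps the generator trivially correct; a smarter generator would keep intermediates in registers instead.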

The next chapter builds on this one by

- updating the tree representation to a two-tiered graph (referred to as a control flow graph)

- taking the existing parser and generator and adding an optimizer in between
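To make "two-tiered" concrete: the outer tier is a graph whose nodes are basic blocks, and the inner tier is the straight-line instruction list inside each block. The sketch below is a hypothetical illustration, not picocuda's code: the tuple instruction encoding and the names `BasicBlock`, `build_cfg`, `reachable`, and `remove_unreachable` are our own assumptions. It splits a flat instruction list at leaders (labels and the instruction after a branch or jump), wires fall-through and branch edges, and then runs one toy optimizer pass, unreachable-block removal, of the kind that could sit between the parser and the generator.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical instruction encoding for this sketch (tuples, RISC-V flavored):
#   ("label", name)        marks a jump target
#   ("j", target)          unconditional jump
#   ("beqz", reg, target)  branch if reg == 0, otherwise fall through
#   anything else          straight-line instruction, e.g. ("addi", "a0", "a0", -1)


@dataclass
class BasicBlock:
    name: str
    instrs: list[tuple] = field(default_factory=list)  # inner tier: straight-line code
    succs: list[str] = field(default_factory=list)  # outer tier: edges to successor blocks


def build_cfg(code: list[tuple]) -> dict[str, BasicBlock]:
    blocks = {"entry": BasicBlock("entry")}  # dicts preserve insertion order
    current: BasicBlock | None = blocks["entry"]
    pending: BasicBlock | None = None  # block ending in beqz, fall-through edge not yet wired
    fresh = 0

    def open_block(name: str) -> None:
        nonlocal current, pending
        block = BasicBlock(name)
        blocks[name] = block
        if current is not None:  # previous block runs straight into this one
            current.succs.append(name)
        if pending is not None:  # previous branch falls through into this one
            pending.succs.append(name)
            pending = None
        current = block

    for ins in code:
        if ins[0] == "label":  # a jump target always starts a new block
            open_block(ins[1])
            continue
        if current is None:  # first instruction after a branch or jump starts a new block
            open_block(f"bb{fresh}")
            fresh += 1
        current.instrs.append(ins)
        if ins[0] == "j":
            current.succs.append(ins[1])
            current = None
        elif ins[0] == "beqz":
            current.succs.append(ins[2])
            pending, current = current, None
    return blocks


def reachable(blocks: dict[str, BasicBlock], start: str = "entry") -> set[str]:
    # Depth-first search over the outer tier (block-to-block edges).
    seen: set[str] = set()
    stack = [start]
    while stack:
        name = stack.pop()
        if name not in seen:
            seen.add(name)
            stack.extend(blocks[name].succs)
    return seen


def remove_unreachable(blocks: dict[str, BasicBlock]) -> dict[str, BasicBlock]:
    # A toy optimizer pass: drop blocks that no path from entry can reach.
    live = reachable(blocks)
    return {name: b for name, b in blocks.items() if name in live}


# A countdown loop with one dead instruction after the unconditional jump.
EXAMPLE = [
    ("li", "a0", 3),
    ("label", "loop"),
    ("beqz", "a0", "done"),
    ("addi", "a0", "a0", -1),
    ("j", "loop"),
    ("addi", "a1", "a1", 1),  # unreachable: follows `j` and has no label
    ("label", "done"),
]
```

On `EXAMPLE`, the block after `j loop` (named `bb1` here) has no predecessors, so `remove_unreachable` drops it while the loop itself survives.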