Machine Learning Systems: A Programmer's Perspective

The hacker's guide to accelerated machine learning

Train nanogpt and nanochat with picograd: the bridge from micrograd to tinygrad

Implement your own distributed parallel compiler for differentiating high-dimensional functions (from scratch)

by j4orz.

First Edition.

Preface

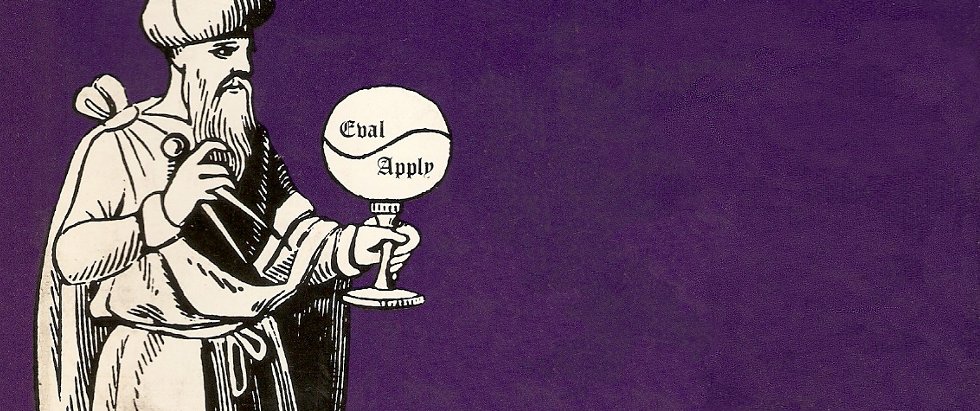

As a programmer and compiler writer, I became frustrated with how non-constructive and disjointed my learning experience was in the discipline of machine learning systems. I needed a single resource like SICP and its spiritual successor PAPL, which provide the Feynman-like counting-to-calculus progression, but for computation. In SICP and PAPL, you start with the elements of programming on a substitution model (i.e. the lambda calculus), iteratively deepen the semantics of computation with the stack and heap, and end with the implementation of your own virtual interpreter and a compiler to a physical register machine (i.e. a von Neumann machine).

I needed a SICP for the era of software 2.0.

This book is the answer to my original frustrations. It follows the SICP philosophy with a systems bent, since performance is a feature, and especially so in the era of machine learning. If you feel similarly, you may benefit from it too. The Structure and Interpretation of Tensor Programs will take you from zero to hero in machine learning by implementing your own distributed parallel compiler for differentiating high-dimensional functions, line by line, from scratch.

Good luck on your journey.

Are you ready to begin?

Introduction

Abstraction

As programmers, we are heirs to a great mathematical tradition. Be careful when you meet someone who claims one can program without math. Legend has it that the phrase Ageōmétrētos mēdeìs eisítō, Greek for "let no one ignorant of geometry enter", was inscribed above the entrance to Plato's Academy. One explanation for the Ancient Greek emphasis on geometry is very practical: civilization cannot exist without taxes, and keeping track of taxes requires the ability to measure the geometry of land and count the arithmetic of money. The geometer (the word translates to "land measurer") held a crucial occupation whenever floods erased the markings in the dirt that demarcated ownership and real estate.

However, another explanation for their emphasis on the geometry of shape over the arithmetic of number is their discovery that the square root of 2 is incommensurable. Because the square root of 2 cannot be measured as a ratio of integers (it is irrational), the mathematicians of Ancient Greece founded arithmetic on shape rather than the other way around. This may explain why Euclid's Elements provided an axiomatization of geometry instead of arithmetic; the world had to wait roughly 2000 more years for Giuseppe Peano's axiomatization of arithmetic. As programmers, we share the same dream as the Ancient Greeks: to reduce the world to discrete natural numbers, and in fact to two specific natural numbers, 0 and 1.

Not only do we share the same dream, but we also share the same language, because logic and computation are closely intertwined. When asked to name the first programming language, some might say Fortran, Lisp, Algol, or perhaps even APL. Others might say it was actually Ada Lovelace's pseudo-formalization of natural language to program Babbage's Analytical Engine. But in some sense, the original compiler writer and programming language date back more than 2000 years: the compiler writer was Aristotle, and the language was his logic.

Aristotle was a philosopher: a lover of wisdom. Kant, who was ten times more distant from Aristotle than we are from Kant, even held that nothing significant had been added to Aristotle's views in the intervening two millennia. Aristotle's works spanned nearly every subject, as he was a synoptic thinker who unified all aspects of his theory and thought. But as programmers, we are interested in his work on compiler construction: the development of his logic, which identifies the formal structures embedded in natural language that constitute valid reasoning, and which mathematicians like Euclid used to program some of the earliest algorithms known to the world. Today, very few would maintain that Aristotle's logic is adequate as a basis for modern logic or for the type systems of programming languages. But scholars have come to view Aristotle with new respect, not so much for the correctness of his results as for the remarkable similarity in spirit between much of his work and modern logic.

People often confuse the work of a logician with that of a metaphysician or an epistemologist, whose questions are what reality is and what we can know to be true. But in fact, logicians are not concerned with what is or is not "true". What they are actually concerned with are the properties of the systems people use to make valid inferences from premises to conclusions. The word infer comes from the Latin inferre, "to carry forward" or "to bring in". The logician asks: when is it acceptable to carry forward, or bring in, a conclusion from a premise? This is much like the compiler writer, who is concerned not with the programs a programmer writes but with the programming language itself.

TODO: inference. induction. problem of induction. sunrise. Bayes' theorem. generalization.

| Hypothesis ($H$) | Observation ($O$) | Rule |
|---|---|---|
| true | true | modus ponens |
| false | true | ex falso quodlibet |
| false | false | modus tollens |
| true | false | $H \not\Rightarrow O$ |
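One way to read the table, and this is our interpretation rather than something the text states, is as the truth table of material implication $H \Rightarrow O$. On that reading, the implication fails only in the last row, where a true hypothesis meets a false observation. The following is a minimal Python sketch. The names `implies` and `RULES` are hypothetical, and the rule labels are copied from the table.

```python
from itertools import product


def implies(h: bool, o: bool) -> bool:
    """Material implication H => O: false only when H is true and O is false."""
    return (not h) or o


# Rule labels copied from the table, keyed by (H, O).
RULES = {
    (True, True): "modus ponens",
    (False, True): "ex falso quodlibet",
    (False, False): "modus tollens",
    (True, False): "H does not imply O",
}

if __name__ == "__main__":
    # Enumerate all four (H, O) combinations and print the implication's value.
    for h, o in product([True, False], repeat=2):
        print(f"H={h!s:<5} O={o!s:<5} H=>O={implies(h, o)!s:<5} {RULES[(h, o)]}")
```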

Returning to the 21st century, classical programming languages can declare functions up front in order to model discrete and deterministic mathematical objects like the List, Tree, and Graph. But they are far less successful at describing continuous and stochastic phenomena such as images and language.

In order to endow machines with human-level capabilities in image recognition for self-driving, or language modeling for assistants, functions must be recovered from data. After your study, you will come to understand that machine learning is the mechanization of inference.

In your hands lies the hacker's guide to high-performance machine learning.

Machine learning is the pursuit of endowing machines with the ability to learn by induction.

How this is precisely done will become clear to you soon.

Assembly

You have quite a journey ahead of you.

Are you ready to begin?

Le Moyen Age et la Renaissance Paris. 1848-1859


1. Elements of Learning

A computational process is indeed much like a sorcerer's idea of a spirit. It cannot be seen or touched. It is not composed of matter at all. However, it is very real. It can perform intellectual work. It can answer questions. — Harold Abelson and Gerald Sussman

Contents

- 1.1 Regression with Least Squares

- 1.2 Matrix Multiplication on Serial Processors

- 1.3 Matrix Multiplication on Parallel Processors

- 1.4 Tensor Languages and Device Runtimes

- 1.5 Matrix Calculus for Optimization

- 1.6 Classification with Cross Entropy

- 1.7 Learning Representations

- 1.8 Generalized Linear Models

- 1.9 Learning Non-Linearities

1.1 Regression with Least Squares

- all the math and axiomatizations

- distributions, high dimensional functions, optimization

```python
import numpy
```
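As a first taste of recovering a function from data, here is a minimal sketch of least squares for a single input feature. It fits $y \approx wx + b$ by minimizing the mean squared error, using the closed form $w = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $b = \bar{y} - w\bar{x}$. The sketch assumes plain Python lists rather than the NumPy arrays the section imports. The names `fit_line` and `mse` are hypothetical and do not come from any library.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of y ~ w*x + b for one input feature (closed form)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired samples")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are equal, so the slope is undefined")
    w = s_xy / s_xx
    # The fitted line passes through the point of means (x_mean, y_mean).
    b = y_mean - w * x_mean
    return w, b


def mse(xs: Sequence[float], ys: Sequence[float], w: float, b: float) -> float:
    """Mean squared error, the objective that least squares minimizes."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # sampled from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(w, b, mse(xs, ys, w, b))  # 2.0 1.0 0.0
```

On noiseless data the fit recovers the generating line exactly. On noisy data, any perturbation of $w$ or $b$ can only increase the error.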

1.2 Matrix Multiplication on Serial Processors

programmers: map abstraction to assembly. semantic gap. computation: orchestrating electrons.

As mentioned in the introduction, the Ancient Greeks were among the first people to discover the beauty in mathematics and programming. They did so even though they executed algorithms such as Egyptian multiplication and Euclid's GCD algorithm by hand, carrying out each instruction themselves. For the next 2000 years humanity would discover and invent new algorithms (like Newton's method, which will soon become our friend). Execution, however, remained a manual process for the profession of human "computers". That changed in the 1930s, when mathematicians including Alonzo Church, Kurt Gödel, and Alan Turing devised formal models of computation itself. These models have the scary-sounding names of the lambda calculus, recursive functions, and Turing machines, but the simple yet powerful result is that all three can simulate one another. This equivalence supports the Church-Turing thesis: any algorithm can be computed by a Turing machine (or an equivalent model of computation).
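The three algorithms named above fit in a few lines each. This sketch follows our own conventions:

- `egyptian_multiply` uses the doubling-and-halving formulation.
- `euclid_gcd` uses the modern remainder form; Euclid's own statement repeatedly subtracts.
- `newton_sqrt` applies Newton's method to $f(y) = y^2 - x$, which gives the update $y \leftarrow \tfrac{1}{2}(y + x/y)$.

All function names are hypothetical.

```python
def egyptian_multiply(a: int, b: int) -> int:
    """Multiply non-negative integers using only doubling, halving, and addition."""
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    result = 0
    while b > 0:
        if b & 1:   # b is odd: the current doubling of a contributes to the product
            result += a
        a += a      # double a
        b >>= 1     # halve b, discarding the remainder
    return result


def euclid_gcd(a: int, b: int) -> int:
    """Greatest common divisor by Euclid's algorithm (remainder form)."""
    a, b = abs(a), abs(b)
    while b != 0:
        a, b = b, a % b
    return a


def newton_sqrt(x: float, tol: float = 1e-12) -> float:
    """Approximate sqrt(x) for x > 0 with Newton's method on f(y) = y*y - x."""
    if x <= 0:
        raise ValueError("this sketch expects x > 0")
    y = x if x >= 1 else 1.0  # start at or above sqrt(x)
    while True:
        next_y = 0.5 * (y + x / y)  # y - f(y) / f'(y)
        if abs(next_y - y) <= tol * next_y:  # relative change is tiny: stop
            return next_y
        y = next_y
```

For instance, `egyptian_multiply(13, 7)` returns 91 after three doublings, and `euclid_gcd(48, 18)` returns 6.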

von Neumann architecture. mainframes, minis, micros. C on the DEC PDP-11 (Intel/AMD/ARM CPUs still present the illusion of a PDP-11). from the IBM green card to the RISC green card. operating systems and compilers were the first applications written in C. but in numerical computing, there was the BLAS. let's make matmul as fast as possible on the CPU. matmul is a linear transformation, and every linear transformation (between finite-dimensional spaces) is a matmul.
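The slogan "matmul is linear transformation, and linear transformation is matmul" can be checked in code. This sketch uses plain Python lists of rows, and `matvec`, `matmul`, and `matrix_of` are hypothetical helpers. It shows two things:

- Applying $B$ and then $A$ to a vector agrees with applying the single matrix $AB$.
- Conversely, the matrix of a linear map can be recovered column by column from its action on the standard basis vectors $e_j$. This direction assumes finite-dimensional spaces with a chosen basis.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Apply the linear map A to x: y[i] = sum over k of A[i][k] * x[k]."""
    return [sum(a_ik * x_k for a_ik, x_k in zip(row, x)) for row in A]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """C[i][j] = sum over k of A[i][k] * B[k][j], the matrix of x -> A(Bx)."""
    m, p = len(B), len(B[0])
    if any(len(row) != m for row in A):
        raise ValueError("inner dimensions must agree")
    return [[sum(row[k] * B[k][j] for k in range(m)) for j in range(p)] for row in A]


def matrix_of(f, n: int) -> Matrix:
    """Recover the matrix of a linear map f: R^n -> R^m from f(e_0), ..., f(e_{n-1})."""
    basis = [[1.0 if i == j else 0.0 for i in range(n)] for j in range(n)]
    columns = [f(e_j) for e_j in basis]  # column j of the matrix is f(e_j)
    return [list(row) for row in zip(*columns)]  # transpose columns into rows


if __name__ == "__main__":
    A = [[0.0, -1.0], [1.0, 0.0]]  # rotate the plane by 90 degrees
    B = [[2.0, 0.0], [0.0, 3.0]]   # scale x by 2 and y by 3
    x = [1.0, 1.0]
    print(matvec(A, matvec(B, x)))  # apply B, then A: [-3.0, 2.0]
    print(matvec(matmul(A, B), x))  # apply the single matrix AB: [-3.0, 2.0]
    print(matrix_of(lambda v: matvec(A, v), 2) == A)  # True
```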

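```python
def matmul(A, B):
    raise NotImplementedError("todo")


if __name__ == "__main__":
    N = 4096
    # N x N matrices stored as lists of rows
    A = [[1.0] * N for _ in range(N)]
    B = [[1.0] * N for _ in range(N)]
    matmul(A, B)
```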

```javascript
function matmul(A, B) {
  throw new Error("todo");
}
```

```rust
fn matmul(x: Vec<f64>, y: Vec<f64>) -> Vec<f64> {
    todo!()
}

fn main() {
    let n = 4096;
}
```

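```cuda
#include <cuda_runtime.h>
#include <stdio.h>

// Element-wise vector addition: each thread computes one output element.
__global__ void addKernel(int *C, const int *A, const int *B, int n) {
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    // The grid may launch more threads than elements; guard against out-of-bounds access.
    if (tid < n) {
        C[tid] = A[tid] + B[tid];
    }
}
```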

- correctness

- perf
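The correctness and perf bullets can be made concrete with a small harness. This pure-Python sketch (all names hypothetical) does three things:

- It compares the textbook `ijk` loop order against an `ikj` reordering, whose innermost loop walks along a row of $B$ and a row of $C$.
- It checks that the two orders agree.
- It reports throughput using the conventional count of $2n^3$ floating-point operations for an $n \times n$ matmul.

The harness deliberately uses a small $n$, because pure Python would take far too long at $N = 4096$. CPython timings also mostly measure interpreter overhead rather than the memory-access effects that typically make loop order matter in compiled code.

```python
import random
import time


def matmul_ijk(A, B):
    """Textbook order: the innermost loop walks down column j of B."""
    n, m, p = len(A), len(B), len(B[0])
    C = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            acc = 0.0
            for k in range(m):
                acc += A[i][k] * B[k][j]
            C[i][j] = acc
    return C


def matmul_ikj(A, B):
    """Reordered loops: the innermost loop walks along row k of B and row i of C."""
    n, m, p = len(A), len(B), len(B[0])
    C = [[0.0] * p for _ in range(n)]
    for i in range(n):
        C_i = C[i]
        for k in range(m):
            a_ik, B_k = A[i][k], B[k]
            for j in range(p):
                C_i[j] += a_ik * B_k[j]
    return C


def max_abs_diff(X, Y):
    """Largest element-wise absolute difference between two equal-shape matrices."""
    return max(abs(x - y) for row_x, row_y in zip(X, Y) for x, y in zip(row_x, row_y))


def gflops(n, seconds):
    """Throughput of an n x n matmul, counting 2n^3 FLOPs (n^3 multiplies + n^3 adds)."""
    return 2 * n**3 / seconds / 1e9


if __name__ == "__main__":
    n = 64  # deliberately tiny compared with N = 4096
    rng = random.Random(0)  # fixed seed for reproducible inputs
    A = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    B = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    results = {}
    for f in (matmul_ijk, matmul_ikj):
        start = time.perf_counter()
        results[f.__name__] = f(A, B)
        elapsed = time.perf_counter() - start
        print(f"{f.__name__}: {elapsed:.4f} s, {gflops(n, elapsed):.5f} GFLOP/s")
    # Both orders add the products for each C[i][j] in the same k order,
    # so here the results should agree exactly; reorderings that change the
    # summation order would only agree up to floating-point rounding.
    print("max |ijk - ikj| =", max_abs_diff(results["matmul_ijk"], results["matmul_ikj"]))
```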

1.3 Matrix Multiplication on Parallel Processors

1.4 Tensor Languages and Device Runtimes

C99 (programming language), libc (standard library), tensor, device

1.5 Matrix Calculus for Optimization

2. Deep Neural Networks

Contents

- 2.1 Sequence Learning with FFN

- 2.2 Differentiation

- 2.3 Symbolic Differentiation

- 2.4 Numeric Differentiation

- 2.5 Automatic Differentiation

- 2.6 Stochastic Gradient Descent

- 2.7 Adam

- 2.8 Feedforward Neural Network

- 2.9 Recurrent Neural Network

- 2.10 Transformers

- 2.11 Mixture of Experts

2.1 Learning Sequences

2.8 Feedforward Neural Networks

2.9 Recurrent Neural Networks

2.10 Transformers

2.11 Mixture of Experts

3. Scaling Networks

Library of Alexandria by O. Von Corven. 19th century.


Afterword

To continue deepening your knowledge, the following courses are also a good next step. Once you feel comfortable, you should graduate towards contributing to tinygrad, an autograd with 20k lines of code. Good luck on your journey. I'll see you at work.

Tensor Programming

- Cambridge: Information Theory, Pattern Recognition and Neural Networks by David MacKay

- Tübingen ML4202: Probabilistic Machine Learning

- Stanford CS109: Probability for Computer Scientists by Chris Piech

- Stanford CS124: From Languages to Information by Dan Jurafsky

- Stanford CS229: Machine Learning by Andrew Ng

- Stanford CS230: Deep Learning by Andrew Ng

- Stanford CS224N: NLP with Deep Learning by Christopher Manning

- Neural Networks: Zero to Hero by Andrej Karpathy

- Stanford CS336: Language Modeling from Scratch by Percy Liang

Tensor Interpretation

- Princeton COS302: Mathematics for Machine Learning by Ryan Adams

- UPenn STAT 4830: Numerical Optimization for Machine Learning by Damek Davis

- Brown CS053: Coding the Matrix by Philip Klein

- MIT 18.S096: Matrix Calculus by Alan Edelman and Steven Johnson

- MIT 18.335J: Numerical Methods by Steven Johnson

- MIT 6.172: Performance Engineering by Charles Leiserson and Julian Shun

- MIT 6.S894: Accelerated Computing by Jonathan Ragan-Kelley

- Stanford CS149: Parallel Computing by Kayvon Fatahalian

- Berkeley CS267: Applications of Parallel Computers by Kathy Yelick

- Carnegie Mellon 18-447: Computer Architecture by Onur Mutlu

- Carnegie Mellon 18-742: Parallel Computer Architecture by Onur Mutlu

- ETH 227: Programming Heterogeneous Computing Systems with GPUs by Onur Mutlu

Tensor Compilation

- Berkeley CS265: Compiler Optimization by Max Willsey

- Cornell CS4120: Compilers by Andrew Myers

- Cornell CS6120: Advanced Compilers by Adrian Sampson

- Cornell CS4787: Principles of Large-Scale Machine Learning by Chris De Sa

- Cornell CS6787: Advanced Machine Learning Systems by Chris De Sa

- Carnegie Mellon 15-411: Compiler Design by Frank Pfenning

- Carnegie Mellon 15-745: Optimizing Compilers by Phil Gibbons

- Rice COMP412: Compiler Construction by Keith Cooper

- Rice COMP512: Advanced Compiler Construction by Keith Cooper

Appendix

The only prerequisite assumed is the ability to program. In SITP we use:

- Python: because its syntactic similarity to natural language has made it the lingua franca of ML
- Rust: because the semantics of typed functional programming closely resemble mathematics

If you are unfamiliar with either of these languages, the following is a quick overview of a functional subset of their syntax.

Python
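Here is a minimal sketch of the functional subset of Python the book leans on: functions as values, recursion, comprehensions, and folds. It assumes Python 3, and every name in it is illustrative.

```python
from functools import reduce


def square(x):
    return x * x


def compose(f, g):
    """Functions are values: return the new function x -> f(g(x))."""
    return lambda x: f(g(x))


def length(xs: tuple) -> int:
    """Recursion in place of a loop: the length of a tuple."""
    return 0 if not xs else 1 + length(xs[1:])


# Comprehensions build new lists instead of mutating existing ones.
squares = [square(n) for n in range(5)]        # [0, 1, 4, 9, 16]
evens = [n for n in range(10) if n % 2 == 0]   # [0, 2, 4, 6, 8]

# Higher-order functions: map, then a fold (reduce) that sums the results.
total = reduce(lambda acc, n: acc + n, map(square, range(5)), 0)  # 30

# Anonymous functions with lambda, combined by composition.
inc_then_square = compose(square, lambda n: n + 1)  # inc_then_square(2) == 9

# Immutable tuples and destructuring assignment.
point = (3, 4)
px, py = point
```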

Rust

Bibliography

- Abadi, Martín, Paul Barham, Jianmin Chen, Zhifeng Chen, Andy Davis, Jeffrey Dean, Matthieu Devin, et al. 2016. “TensorFlow: A System for Large-Scale Machine Learning,” May. https://arxiv.org/abs/1605.08695.

- Agrawal, Akshay, Akshay Naresh Modi, Alexandre Passos, Allen Lavoie, Ashish Agarwal, Asim Shankar, Igor Ganichev, et al. 2019. “TensorFlow Eager: A Multi-Stage, Python-Embedded DSL for Machine Learning.” ArXiv.org

- Ansel, Jason, Edward Yang, Horace He, Natalia Gimelshein, Animesh Jain, Michael Voznesensky, Bin Bao, et al. 2024. “PyTorch 2: Faster Machine Learning through Dynamic Python Bytecode Transformation and Graph Compilation.” ACM, April.

- Abelson, Harold, and Gerald Jay Sussman. 1996. Structure and Interpretation of Computer Programs, Second Edition. MIT Press.

- Aho, Alfred V, Monica S Lam, Ravi Sethi, and Jeffrey D Ullman. 2015. Compilers: Principles, Techniques, & Tools. Pearson.

- Aggarwal, Charu C. 2020. Linear Algebra and Optimization for Machine Learning. Springer.

- Bastien, Frédéric, Pascal Lamblin, Razvan Pascanu, James Bergstra, Ian Goodfellow, Arnaud Bergeron, Nicolas Bouchard, David Warde-Farley, and Yoshua Bengio. 2012. “Theano: New Features and Speed Improvements.” ArXiv.org

- Baydin, Atilim Gunes, Barak A Pearlmutter, Alexey Andreyevich Radul, and Jeffrey Mark Siskind. 2015. “Automatic Differentiation in Machine Learning: A Survey.” ArXiv.org

- Bright, Paige, Alan Edelman, and Steven G Johnson. 2025. “Matrix Calculus (for Machine Learning and Beyond).” ArXiv.org

- Blondel, Mathieu, and Vincent Roulet. 2024. “The Elements of Differentiable Programming.” ArXiv.org

- Chen, Tianqi, Thierry Moreau, Ziheng Jiang, Lianmin Zheng, Eddie Yan, Meghan Cowan, Haichen Shen, et al. 2018. “TVM: An Automated End-To-End Optimizing Compiler for Deep Learning.” ArXiv, February.

- Cho, Kyunghyun. 2025. “Machine Learning: A Lecture Note.” ArXiv.org

- Cooper, Keith D, and Linda Torczon. 2022. Engineering a Compiler. Morgan Kaufmann.

- Cormen, Thomas H, Charles Eric Leiserson, Ronald L Rivest, and Clifford Stein. 2009. Introduction to Algorithms. MIT Press.

- Frostig, Roy, Matthew Johnson, and Chris Leary. n.d. “Compiling Machine Learning Programs via High-Level Tracing.”

- Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. 2016. Deep Learning. Cambridge, Massachusetts: The MIT Press.

- Griewank, Andreas, and Andrea Walther. 2009. Evaluating Derivatives: Principles and Techniques of Algorithmic Differentiation. Philadelphia: Society for Industrial and Applied Mathematics.

- Hack, Sebastian. 2007. Register Allocation for Programs in SSA Form.

- Harris, Charles R., K. Jarrod Millman, Stéfan J. van der Walt, Ralf Gommers, Pauli Virtanen, David Cournapeau, Eric Wieser, et al. 2020. “Array Programming with NumPy.” Nature 585 (7825): 357–62.

- Harris, Sarah L., and David Harris. 2021. Digital Design and Computer Architecture: RISC-V Edition. Morgan Kaufmann.

- Hastie, Trevor, Robert Tibshirani, and Jerome Friedman. 2009. The Elements of Statistical Learning, Second Edition : Data Mining, Inference, and Prediction. 2nd ed. New York: Springer.

- Hennessy, John L, and David A Patterson. 2025. Computer Architecture: A Quantitative Approach. Cambridge, MA: Morgan Kaufmann.

- Hwu, Wen-Mei W, David B. Kirk, and Izzat El Hajj. 2022. Programming Massively Parallel Processors: A Hands-on Approach. Morgan Kaufmann.

- Jia, Yangqing, Evan Shelhamer, Jeff Donahue, Sergey Karayev, Jonathan Long, Ross Girshick, Sergio Guadarrama, and Trevor Darrell. 2014. “Caffe: Convolutional Architecture for Fast Feature Embedding.” ArXiv.org

- Jurafsky, Dan, and James H. Martin. 2025. “Speech and Language Processing.” Stanford.edu. 2025.

- Kang, Wanmo, and Kyunghyun Cho. 2025. “Linear Algebra for Data Science.” 2025.

- Klein, Philip N. 2013. Coding the Matrix: Linear Algebra through Applications to Computer Science. Newton, Mass: Newtonian Press.

- Krishnamurthi, Shriram. 2025. “Programming Languages: Application and Interpretation.” 2025.

- Lattner, Chris, Mehdi Amini, Uday Bondhugula, Albert Cohen, Andy Davis, Jacques Pienaar, River Riddle, Tatiana Shpeisman, Nicolas Vasilache, and Oleksandr Zinenko. 2020. “MLIR: A Compiler Infrastructure for the End of Moore’s Law.” ArXiv:2002.11054

- Lay, David C, Steven R Lay, and Judith McDonald. 2016. Linear Algebra and Its Applications. Boston: Pearson.

- MacKay, David J C. 2003. Information Theory, Inference, and Learning Algorithms. Cambridge: Cambridge University Press.

- Møller, Anders, and Michael I Schwartzbach. 2024. “Static Program Analysis.” Cs.au.dk. 2024.

- Murphy, Kevin P. 2023. Probabilistic Machine Learning: Advanced Concepts. MIT Press.

- Murphy, Kevin P. 2022. Probabilistic Machine Learning: An Introduction. Cambridge: MIT Press.

- Ng, Andrew, and Tengyu Ma. 2023. CS229 Lecture Notes.

- Paszke, Adam, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, et al. 2019. “PyTorch: An Imperative Style, High-Performance Deep Learning Library.” ArXiv.org

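- Ragan-Kelley, Jonathan, Connelly Barnes, Andrew Adams, Sylvain Paris, Frédo Durand, and Saman Amarasinghe. 2013. “Halide: A Language and Compiler for Optimizing Parallelism, Locality, and Recomputation in Image Processing Pipelines.” Proceedings of the 34th ACM SIGPLAN Conference on Programming Language Design and Implementation.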

- Rastello, Fabrice, and Florent Bouchez Tichadou. 2022. SSA-Based Compiler Design. Springer Nature.

- Saeta, Brennan, Denys Shabalin, Marc Rasi, Brad Larson, Xihui Wu, Parker Schuh, Michelle Casbon, et al. 2021. “Swift for TensorFlow: A Portable, Flexible Platform for Deep Learning.” ArXiv.org

- Scardapane, Simone. 2025. “Alice’s Adventures in a Differentiable Wonderland.” ArXiv.org

- Suhan, Alex, Davide Libenzi, Ailing Zhang, Parker Schuh, Brennan Saeta, Jie Young Sohn, and Denys Shabalin. 2021. “LazyTensor: Combining Eager Execution with Domain-Specific Compilers.” ArXiv.org

- Spector, Benjamin F, Simran Arora, Aaryan Singhal, Daniel Y Fu, and Christopher Ré. 2024. “ThunderKittens: Simple, Fast, and Adorable AI Kernels.” ArXiv.org

- Stepanov, Alexander A, and Daniel E Rose. 2015. From Mathematics to Generic Programming. Upper Saddle River, NJ: Addison-Wesley.

- Stepanov, Alexander, and Paul McJones. 2019. Elements of Programming. Semigroup Press.

- Tarjan, Robert E. 1988. Data Structures and Network Algorithms. Philadelphia: Society For Industrial And Applied Mathematics.

- Tokui, Seiya, Ryosuke Okuta, Takuya Akiba, Yusuke Niitani, Toru Ogawa, Shunta Saito, Shuji Suzuki, Kota Uenishi, Brian Vogel, and Hiroyuki Yamazaki Vincent. 2019. “Chainer: A Deep Learning Framework for Accelerating the Research Cycle.” ArXiv.org

- Tillet, Philippe, Hsiang-Tsung Kung, and David G Cox. 2019. “Triton: An Intermediate Language and Compiler for Tiled Neural Network Computations.” ACM.

- Trefethen, Lloyd N, and David Bau. 1997. Numerical Linear Algebra. SIAM.

- Naumann, Uwe. 2012. The Art of Differentiating Computer Programs: An Introduction to Algorithmic Differentiation. Philadelphia: Society For Industrial And Applied Mathematics.